Welcome to the Light Dark Matter eXperiment! This is the central page for documenting our software. Here, you will find manually written documents as well as links to other manuals related to the software. This site is generated using mdbook which has a helpful page on how to read a site formatted like this. All of the pages are hosted here except for the pages in the Reference section which are links to the manuals hosted at different sites.

Below is a list of past video tutorials that are available online. Some of them may be out of date, but they can still be helpful in defining what kind of vocabulary we often use. The first section of this manual is text-based information about how to get started using (and eventually developing) ldmx-sw.

- ldmx-sw v2.0.0 tutorial May 2020

- ldmx-sw v3.0.0 tutorials (links to session recordings included)

This manual is separated into two main sections based on how you are interacting with ldmx-sw.

- Using is when you are running ldmx-sw with fire and are not actively changing code within ldmx-sw (i.e. you can fix the version of ldmx-sw you are using for your work).

- Developing is when you are actively changing code within ldmx-sw and so need to compile ldmx-sw yourself.

Notice that this categorization only cares about the ldmx-sw source code. People using ldmx-sw very often are still developing code that may just not be within ldmx-sw. Additionally, whether or not you are developing ldmx-sw may change depending on the project you are working on. Perhaps you find a bug or think of a new feature -- contributions are encouraged and welcome!

Getting Started

This guide is focused on first-time users of ldmx-sw. If you are interested in contributing to ldmx-sw, please look at the getting started developing guide for help starting to develop ldmx-sw.

ldmx-sw is a large software project that builds on top of other large and complicated software projects. For this reason, we have chosen to use containers to share fixed software environments allowing all of us to know exactly what version of ldmx-sw (and its underlying packages) is being used.

Each of the steps below is really short but more detail has been added in order to help users debug any issues they may encounter. If all goes well, you will be able to fly through these instructions within 5-10 minutes (depending on how long software installations take). Each of the sections has a "Comments" subsection for extra details and a "Test" subsection allowing you to verify that you completed that step successfully.

While many new ldmx-sw users may be unfamiliar with the terminal, explaining its use is beyond the scope of this site. If you are unfamiliar with the terminal, a helpful resource is linuxcommand.org and there are many others available online since terminals are a common tool utilized by software developers.

Windows Comments

- If you are running on a Microsoft Windows system, it is necessary for you to do all of the steps below within Windows Subsystem for Linux (WSL). The permissions system that docker relies on in order to effectively run the containers is not supported by Windoze. (While GitBash and the Command Prompt can look similar to other terminals, make sure to open a WSL terminal --- often labeled "Ubuntu").

- As of this writing, you cannot use a VPN and connect to the internet from within WSL

- The "docker daemon" needs to be running. On most systems, this program starts automatically when the computer is booted. You can check if the docker daemon is running by trying to run a simple docker container. If it is not running, you will need to start it manually.

- Docker Desktop outside WSL needs to be running to be able to use docker inside WSL? (question mark because unsure)

Install a Container Runner

Currently, we use denv to help shorten and unify container interaction across

a few different runners, so we only require a container runner supported by denv.

On personal laptops, most users will find it easiest to install the docker engine.

apptainer should already be installed on shared computing clusters.1

- If you are on Windows, make sure to use the WSL2 backend for docker.

- On Linux systems, make sure to manage docker as non-root user

so that you can run that command without

sudo. - If you choose to use

podman, make sure to update theregistries.confconfiguration file in order to allow forpodmanto pull from DockerHub.

You can run a simple container in whichever runner is available.

docker run hello-world

or

apptainer run docker://ghcr.io/apptainer/lolcow

This may be the first point where you enter into a terminal unless your installation was terminal-based. If you are using Windows, remember to go into Windows Subsystem for Linux rather than other Windows terminals like GitBash or Command Prompt.

For those who've been using containers (with or without LDMX) for awhile,

the program singularity may sound familiar.

apptainer is just a new brand name for singularity after it was adopted

by the Linux foundation.

Many apptainer installations also come with a wrapper program

called singularity for backwards compatibility.

Install denv

For most users the following command will install the latest version of denv

curl -s https://raw.githubusercontent.com/tomeichlersmith/denv/main/install | sh

If it doesn't work follow these instructions for installing denv.

They are short but require you to be in the terminal.

- Remember to install

denvwithin WSL if you are using Windows. - While

denv's install script tries to help you update yourPATHvariable, it may not be perfect. You may need to consult the documentation for your shell on how to add new directories to thePATHvariable so that thedenvcommand can be found by your shell.

Replicating the Legacy ldmx Command

Replicating the Legacy ldmx Command

Some folks want to replicate the ldmx bash function which preceeded denv.

To do this, you can create a few symlinks.

The examples below are for the default installation prefix for denv

(~/.local), but you can replace this directory with whereever you chose

to install denv when running the curl ... install command.

# for the command

ln -s ~/.local/bin/denv ~/.local/bin/ldmx

# and for the tab completion

ln -s ~/.local/share/bash-completion/completions/denv \

~/.local/share/bash-completion/completions/ldmx

You should be able to have denv run its self-check that also

ensures you have a supported container runner installed.

denv check

Example output would look like

Entrypoint found alongside denv

Looking for apptainer... not found

Looking for singularity... not found

Looking for podman... not found

Looking for docker... found 'Docker version 27.0.3, build 7d4bcd8' <- use without DENV_RUNNER defined

denv would run with 'docker'

Set Up Workspace

Whatever your project is, it will be helpful to have a directory on your computer that holds the files related to this project. If you haven't already made a directory for this purpose, now is the time! This is also where we will choose the version of ldmx-sw to use.

# create a directory for the project

mkdir -p ldmx/my-project

# go into that directory

cd ldmx/my-project

# initialize a new denv, defining the ldmx-sw version

denv init ldmx/pro:v4.0.1

- The specific location of the

my-projectdirectory does not matter. (As long as its within WSL if you're on Windows of course!) - The version of ldmx-sw listed here is just an example, changing the version later can be done with

denv config image ldmx/pro:<ldmx-sw-version>if desired.

The denv init command should not be run from within ldmx-sw.

This could lead to a confusing state where the fire being run

is not the one associated with the ldmx-sw that resides in that

directory.

We can make sure you have access to ldmx-sw by trying to have ldmx-sw's core program fire

printout its help message.

denv fire

This should look something like

$ denv fire

Usage: fire {configuration_script.py} [arguments to configuration script]

configuration_script.py (required) python script to configure the processing

arguments (optional) passed to configuration script when run in python

Using Legacy Versions of ldmx-sw

Using Legacy Versions of ldmx-sw

Versions of ldmx-sw that were built with a version of the development image before 4.2.2 (generaly pre-v4 ldmx-sw) do not have some ease-of-use updates to enable running ldmx-sw from within a denv.

One can still use a denv without much effort. In short, you must update the denv shell's profile.

denv exit 0 # make sure a template .profile is created

Add the following lines to the end of .profile that is in your working

directory (not the .profile in your home directory if you have one).

# add ldmx-sw and ldmx-analysis installs to the various paths

# LDMX_SW_INSTALL is defined when building the production image or users

# can use it to specify a non-normal install location

if [ -z "${LDMX_SW_INSTALL+x}" ]; then

if [ -z "${LDMX_BASE+x}" ]; then

printf "[ldmx-env-init.sh] WARNING: %s\n" \

"Neither LDMX_BASE nor LDMX_SW_INSTALL is defined." \

"At least one needs to be defined to ensure a working installation."

fi

export LDMX_SW_INSTALL="${LDMX_BASE}/ldmx-sw/install"

fi

export LD_LIBRARY_PATH="${LDMX_SW_INSTALL}/lib:${LD_LIBRARY_PATH}"

export PYTHONPATH="${LDMX_SW_INSTALL}/python:${LDMX_SW_INSTALL}/lib:${PYTHONPATH}"

export PATH="${LDMX_SW_INSTALL}/bin:${PATH}"

This is just a short term solution and needs to be done on every computer. Updating to more recent ldmx-sw is advised.

Running a Configuration Script

The next step now that you have access to fire is to run a specific configuration

of ldmx-sw with it!

Configuration scripts are complicated in their own right and have

been given their own chapter.

The next chapter is focused on analyzing event files that have been shared with you

(or generated with a config shared with you) since, generally, analyzing events is

done before development of the configs that produce those events.

Analyzing ldmx-sw Event Files

Often when first starting on LDMX, people are given a .root file produced by ldmx-sw

(or some method for producing their own file). This then leads to the very next

and reasonable question -- how to analyze the data in this file?

Many answers to this question have been said and many of them are functional. In subsequent sections of this chapter of the website, I choose to focus on highlighting two possible analysis workflows that I personally like (for different reasons).

I am not able to fully cover all of the possible different types of analysis, so I am writing this guide in the context of one of the most common analyses: looking at a histogram. This type of analysis can be broken into four steps.

- Load data: from a data file, load the information in that file into memory.

- Data Manipulation: from the data already present, calculate the variable that you would like to put into the histogram.

- Histogram Filling: define how the histogram should be binned and fill the histogram with the variable that you calculated.

- Plotting: from the definition of the histogram and its content, draw the histogram in a visual manner for inspection.

The software that is used to do each of these steps is what mainly separates the different analysis workflows, so I am first going to mention various software tools that are helpful for one or more of these steps. I have separated these tools into two "ecosystems", both of which are popularly used within HEP.

| Purpose | ROOT | scikit-hep |

|---|---|---|

| Load Data | TFile,TTree | uproot |

| Manipulate Data | C++ Code | Vectorized Python with awkward |

| Fill Histograms | TH1* | hist,boost_histogram |

| Plot Histograms | TBrowser, TCanvas | matplotlib,mplhep |

How one mixes and matches these tools is a personal choice, especially since the LDMX collaboration has not landed on a widely agreed-upon method for analysis. With this in mind, I find it important to emphasize that the following subsections are examples of analysis workflows -- a specific analyzer can choose to mix and match the tools however they like.

Caveat

While I (Tom Eichlersmith) have focused on two potential analysis workflows, I do not mean to claim that I have tried all of them and these two are "the best". I just mean to say that I have drifted to these two analysis workflows over time as I've looked for easier and better methods of analyzing data. If you have an analysis workflow that you would like to share, add another subsection to this chapter of the website!

Using Python (Efficiently)

Python is slow. I can't deny it. But Python, for better or for worse, has taken over the scientific programming ecosystem meaning there are a large set of packages that are open source, professionally maintained, and perform the common tasks that scientists want to do.

One aspect of Python that has enabled its widespread adoption

(I think) is the relative ease of writing packages in another

programming language. This means that most of our analysis

code will not be using "pure Python", but instead be using

compiled languages (C++ mostly) hidden behind some convenience

wrappers. This is the strategy of many popular Python packages

numpy, scipy, and the HEP-specific hist and awkward.

My biggest tip for any new Python analyzers is to avoid writing

the for loop. As mentioned, Python itself is slow, so only

write for if you know you are only looping over a small number

of things (e.g. looping over the five different plots you want

to make). We can avoid for by using these packages with compiled

languages under-the-hood. The syntax of writing these "vectorized"

operations is often complicated to grasp, but it is concise, precise,

and performant.

With those introductory ramblings out of the way, let's get started.

Set Up

The packages we use (and the python ecosystem in general) evolves relatively fast. For this reason, it will be helpful to use a newer python version such that you can access the newer versions of the packages. Personally, I use denv to pick new python without needing to compile it and then have my installed packages for that python be isolated to within my working directory.

While you can install the packages I'll be using directly, they (and a few other helpful

ones) are availabe withing the scikit-hep metapackage allowing a quick and simple

installation command. In addition, Jupyter Lab offers an excellent way to see

plots while your constructing them and recieve other feedback from your code in an

immediate way (via a REPL

for those interested).

cd work-area

denv init python:3.11

denv pip install scikit-hep jupyterlab

In addition, you will need some data to play with. I generated the input events.root

file using an ldmx-sw simulation, but I am using common event objects that exist in

most (if not all) event files, so this example should work with others.

Generate Event File with ldmx-sw

Generate Event File with ldmx-sw

Again, I used denv to pin the ldmx-sw version and generate the data file.

denv init ldmx/pro:v3.3.6 eg-gen

cd eg-gen

denv fire config.py

mv events.root path/to/work-area/

where config.py is the following python configuration script.

from LDMX.Framework import ldmxcfg

p = ldmxcfg.Process('test')

from LDMX.SimCore import simulator as sim

mySim = sim.simulator( "mySim" )

mySim.setDetector( 'ldmx-det-v14-8gev', True )

from LDMX.SimCore import generators as gen

mySim.generators = [ gen.single_8gev_e_upstream_tagger() ]

mySim.beamSpotSmear = [20.,80.,0.]

mySim.description = 'Basic test Simulation'

p.sequence = [ mySim ]

p.run = 1

p.maxEvents = 10000

p.outputFiles = [ 'events.root' ]

import LDMX.Ecal.EcalGeometry

import LDMX.Ecal.ecal_hardcoded_conditions

import LDMX.Hcal.HcalGeometry

import LDMX.Ecal.digi as ecal_digi

p.sequence = [

mySim,

ecal_digi.EcalDigiProducer(),

ecal_digi.EcalRecProducer()

]

Launch a jupyter lab with

denv jupyter lab

open the link in your browser and create a new notebook to get started.

Notebooks consist of "cells" which

can contain "markdown" (a way to write write formatted text), code that will be executed, and raw text.

The rest of these sections are the the result of exporting a jupyter notebook (a *.ipynb file)

into "markdown" and then including it in this documentation here.

# load the python modules we will use

import uproot # for data loading

import awkward as ak # for data manipulation

import hist # for histogram filling (and some plotting)

import matplotlib as mpl # for plotting

import matplotlib.pyplot as plt # common shorthand

import mplhep # style of plots

%matplotlib inline

mpl.style.use(mplhep.style.ROOT) # set the plot style

Load Data

The first step to any analysis is loading the data.

For this step in this analysis workflow, we are going to use the uproot package

to load the data into awkward arrays in memory.

Some helpful links to find more details on this

%%time

# while the uproot file is open, conver the 'LDMX_Events' tree into in-memory arrays

with uproot.open('events.root') as f:

events = f['LDMX_Events'].arrays()

# show the events array

events

CPU times: user 3.63 s, sys: 220 ms, total: 3.85 s

Wall time: 3.85 s

Printout of `events` array

[{'SimParticles_test.first': [1, 2, 3, ..., 48, 49], ...},

{'SimParticles_test.first': [1, 2, 3, ..., 61, 62], ...},

{'SimParticles_test.first': [1, 2, 3, ..., 11, 12], ...},

{'SimParticles_test.first': [1, 2, ..., 15, 1199], ...},

{'SimParticles_test.first': [1, 2, 3, ..., 8, 9], ...},

{'SimParticles_test.first': [1, 2, ..., 118, 119], ...},

{'SimParticles_test.first': [1, 2, 3, ..., 10, 11], ...},

{'SimParticles_test.first': [1, 2, 3, ..., 10, 11], ...},

{'SimParticles_test.first': [1, 2, 3, ..., 7, 61], ...},

{'SimParticles_test.first': [1, 2, 3, ..., 10, 11], ...},

...,

{'SimParticles_test.first': [1, 2, 3, ..., 9, 10], ...},

{'SimParticles_test.first': [1, 2, ..., 126, 127], ...},

{'SimParticles_test.first': [1, 2, 3, ..., 9, 10], ...},

{'SimParticles_test.first': [1, 2, ..., 85, 4702], ...},

{'SimParticles_test.first': [1, 2, ..., 72, 6976], ...},

{'SimParticles_test.first': [1, 2, 3, ..., 8, 93], ...},

{'SimParticles_test.first': [1, 2, ..., 7120], ...},

{'SimParticles_test.first': [1, 2, ..., 7921], ...},

{'SimParticles_test.first': [1, 2, 3, ..., 8, 9], ...}]

---------------------------------------------------------------------

type: 10000 * {

"SimParticles_test.first": var * int32,

"SimParticles_test.second.energy_": var * float64,

"SimParticles_test.second.pdgID_": var * int32,

"SimParticles_test.second.genStatus_": var * int32,

"SimParticles_test.second.time_": var * float64,

"SimParticles_test.second.x_": var * float64,

"SimParticles_test.second.y_": var * float64,

"SimParticles_test.second.z_": var * float64,

"SimParticles_test.second.endX_": var * float64,

"SimParticles_test.second.endY_": var * float64,

"SimParticles_test.second.endZ_": var * float64,

"SimParticles_test.second.px_": var * float64,

"SimParticles_test.second.py_": var * float64,

"SimParticles_test.second.pz_": var * float64,

"SimParticles_test.second.endpx_": var * float64,

"SimParticles_test.second.endpy_": var * float64,

"SimParticles_test.second.endpz_": var * float64,

"SimParticles_test.second.mass_": var * float64,

"SimParticles_test.second.charge_": var * float64,

"SimParticles_test.second.daughters_": var * var * int32,

"SimParticles_test.second.parents_": var * var * int32,

"SimParticles_test.second.processType_": var * int32,

"SimParticles_test.second.vertexVolume_": var * string,

"TaggerSimHits_test.id_": var * int32,

"TaggerSimHits_test.layerID_": var * int32,

"TaggerSimHits_test.moduleID_": var * int32,

"TaggerSimHits_test.edep_": var * float32,

"TaggerSimHits_test.time_": var * float32,

"TaggerSimHits_test.px_": var * float32,

"TaggerSimHits_test.py_": var * float32,

"TaggerSimHits_test.pz_": var * float32,

"TaggerSimHits_test.energy_": var * float32,

"TaggerSimHits_test.x_": var * float32,

"TaggerSimHits_test.y_": var * float32,

"TaggerSimHits_test.z_": var * float32,

"TaggerSimHits_test.pathLength_": var * float32,

"TaggerSimHits_test.trackID_": var * int32,

"TaggerSimHits_test.pdgID_": var * int32,

"RecoilSimHits_test.id_": var * int32,

"RecoilSimHits_test.layerID_": var * int32,

"RecoilSimHits_test.moduleID_": var * int32,

"RecoilSimHits_test.edep_": var * float32,

"RecoilSimHits_test.time_": var * float32,

"RecoilSimHits_test.px_": var * float32,

"RecoilSimHits_test.py_": var * float32,

"RecoilSimHits_test.pz_": var * float32,

"RecoilSimHits_test.energy_": var * float32,

"RecoilSimHits_test.x_": var * float32,

"RecoilSimHits_test.y_": var * float32,

"RecoilSimHits_test.z_": var * float32,

"RecoilSimHits_test.pathLength_": var * float32,

"RecoilSimHits_test.trackID_": var * int32,

"RecoilSimHits_test.pdgID_": var * int32,

"HcalSimHits_test.id_": var * int32,

"HcalSimHits_test.edep_": var * float32,

"HcalSimHits_test.x_": var * float32,

"HcalSimHits_test.y_": var * float32,

"HcalSimHits_test.z_": var * float32,

"HcalSimHits_test.time_": var * float32,

"HcalSimHits_test.trackIDContribs_": var * var * int32,

"HcalSimHits_test.incidentIDContribs_": var * var * int32,

"HcalSimHits_test.pdgCodeContribs_": var * var * int32,

"HcalSimHits_test.edepContribs_": var * var * float32,

"HcalSimHits_test.timeContribs_": var * var * float32,

"HcalSimHits_test.nContribs_": var * uint32,

"HcalSimHits_test.pathLength_": var * float32,

"HcalSimHits_test.preStepX_": var * float32,

"HcalSimHits_test.preStepY_": var * float32,

"HcalSimHits_test.preStepZ_": var * float32,

"HcalSimHits_test.preStepTime_": var * float32,

"HcalSimHits_test.postStepX_": var * float32,

"HcalSimHits_test.postStepY_": var * float32,

"HcalSimHits_test.postStepZ_": var * float32,

"HcalSimHits_test.postStepTime_": var * float32,

"HcalSimHits_test.velocity_": var * float32,

"EcalSimHits_test.id_": var * int32,

"EcalSimHits_test.edep_": var * float32,

"EcalSimHits_test.x_": var * float32,

"EcalSimHits_test.y_": var * float32,

"EcalSimHits_test.z_": var * float32,

"EcalSimHits_test.time_": var * float32,

"EcalSimHits_test.trackIDContribs_": var * var * int32,

"EcalSimHits_test.incidentIDContribs_": var * var * int32,

"EcalSimHits_test.pdgCodeContribs_": var * var * int32,

"EcalSimHits_test.edepContribs_": var * var * float32,

"EcalSimHits_test.timeContribs_": var * var * float32,

"EcalSimHits_test.nContribs_": var * uint32,

"EcalSimHits_test.pathLength_": var * float32,

"EcalSimHits_test.preStepX_": var * float32,

"EcalSimHits_test.preStepY_": var * float32,

"EcalSimHits_test.preStepZ_": var * float32,

"EcalSimHits_test.preStepTime_": var * float32,

"EcalSimHits_test.postStepX_": var * float32,

"EcalSimHits_test.postStepY_": var * float32,

"EcalSimHits_test.postStepZ_": var * float32,

"EcalSimHits_test.postStepTime_": var * float32,

"EcalSimHits_test.velocity_": var * float32,

"TargetSimHits_test.id_": var * int32,

"TargetSimHits_test.edep_": var * float32,

"TargetSimHits_test.x_": var * float32,

"TargetSimHits_test.y_": var * float32,

"TargetSimHits_test.z_": var * float32,

"TargetSimHits_test.time_": var * float32,

"TargetSimHits_test.trackIDContribs_": var * var * int32,

"TargetSimHits_test.incidentIDContribs_": var * var * int32,

"TargetSimHits_test.pdgCodeContribs_": var * var * int32,

"TargetSimHits_test.edepContribs_": var * var * float32,

"TargetSimHits_test.timeContribs_": var * var * float32,

"TargetSimHits_test.nContribs_": var * uint32,

"TargetSimHits_test.pathLength_": var * float32,

"TargetSimHits_test.preStepX_": var * float32,

"TargetSimHits_test.preStepY_": var * float32,

"TargetSimHits_test.preStepZ_": var * float32,

"TargetSimHits_test.preStepTime_": var * float32,

"TargetSimHits_test.postStepX_": var * float32,

"TargetSimHits_test.postStepY_": var * float32,

"TargetSimHits_test.postStepZ_": var * float32,

"TargetSimHits_test.postStepTime_": var * float32,

"TargetSimHits_test.velocity_": var * float32,

"TriggerPad1SimHits_test.id_": var * int32,

"TriggerPad1SimHits_test.edep_": var * float32,

"TriggerPad1SimHits_test.x_": var * float32,

"TriggerPad1SimHits_test.y_": var * float32,

"TriggerPad1SimHits_test.z_": var * float32,

"TriggerPad1SimHits_test.time_": var * float32,

"TriggerPad1SimHits_test.trackIDContribs_": var * var * int32,

"TriggerPad1SimHits_test.incidentIDContribs_": var * var * int32,

"TriggerPad1SimHits_test.pdgCodeContribs_": var * var * int32,

"TriggerPad1SimHits_test.edepContribs_": var * var * float32,

"TriggerPad1SimHits_test.timeContribs_": var * var * float32,

"TriggerPad1SimHits_test.nContribs_": var * uint32,

"TriggerPad1SimHits_test.pathLength_": var * float32,

"TriggerPad1SimHits_test.preStepX_": var * float32,

"TriggerPad1SimHits_test.preStepY_": var * float32,

"TriggerPad1SimHits_test.preStepZ_": var * float32,

"TriggerPad1SimHits_test.preStepTime_": var * float32,

"TriggerPad1SimHits_test.postStepX_": var * float32,

"TriggerPad1SimHits_test.postStepY_": var * float32,

"TriggerPad1SimHits_test.postStepZ_": var * float32,

"TriggerPad1SimHits_test.postStepTime_": var * float32,

"TriggerPad1SimHits_test.velocity_": var * float32,

"TriggerPad2SimHits_test.id_": var * int32,

"TriggerPad2SimHits_test.edep_": var * float32,

"TriggerPad2SimHits_test.x_": var * float32,

"TriggerPad2SimHits_test.y_": var * float32,

"TriggerPad2SimHits_test.z_": var * float32,

"TriggerPad2SimHits_test.time_": var * float32,

"TriggerPad2SimHits_test.trackIDContribs_": var * var * int32,

"TriggerPad2SimHits_test.incidentIDContribs_": var * var * int32,

"TriggerPad2SimHits_test.pdgCodeContribs_": var * var * int32,

"TriggerPad2SimHits_test.edepContribs_": var * var * float32,

"TriggerPad2SimHits_test.timeContribs_": var * var * float32,

"TriggerPad2SimHits_test.nContribs_": var * uint32,

"TriggerPad2SimHits_test.pathLength_": var * float32,

"TriggerPad2SimHits_test.preStepX_": var * float32,

"TriggerPad2SimHits_test.preStepY_": var * float32,

"TriggerPad2SimHits_test.preStepZ_": var * float32,

"TriggerPad2SimHits_test.preStepTime_": var * float32,

"TriggerPad2SimHits_test.postStepX_": var * float32,

"TriggerPad2SimHits_test.postStepY_": var * float32,

"TriggerPad2SimHits_test.postStepZ_": var * float32,

"TriggerPad2SimHits_test.postStepTime_": var * float32,

"TriggerPad2SimHits_test.velocity_": var * float32,

"TriggerPad3SimHits_test.id_": var * int32,

"TriggerPad3SimHits_test.edep_": var * float32,

"TriggerPad3SimHits_test.x_": var * float32,

"TriggerPad3SimHits_test.y_": var * float32,

"TriggerPad3SimHits_test.z_": var * float32,

"TriggerPad3SimHits_test.time_": var * float32,

"TriggerPad3SimHits_test.trackIDContribs_": var * var * int32,

"TriggerPad3SimHits_test.incidentIDContribs_": var * var * int32,

"TriggerPad3SimHits_test.pdgCodeContribs_": var * var * int32,

"TriggerPad3SimHits_test.edepContribs_": var * var * float32,

"TriggerPad3SimHits_test.timeContribs_": var * var * float32,

"TriggerPad3SimHits_test.nContribs_": var * uint32,

"TriggerPad3SimHits_test.pathLength_": var * float32,

"TriggerPad3SimHits_test.preStepX_": var * float32,

"TriggerPad3SimHits_test.preStepY_": var * float32,

"TriggerPad3SimHits_test.preStepZ_": var * float32,

"TriggerPad3SimHits_test.preStepTime_": var * float32,

"TriggerPad3SimHits_test.postStepX_": var * float32,

"TriggerPad3SimHits_test.postStepY_": var * float32,

"TriggerPad3SimHits_test.postStepZ_": var * float32,

"TriggerPad3SimHits_test.postStepTime_": var * float32,

"TriggerPad3SimHits_test.velocity_": var * float32,

"EcalScoringPlaneHits_test.id_": var * int32,

"EcalScoringPlaneHits_test.layerID_": var * int32,

"EcalScoringPlaneHits_test.moduleID_": var * int32,

"EcalScoringPlaneHits_test.edep_": var * float32,

"EcalScoringPlaneHits_test.time_": var * float32,

"EcalScoringPlaneHits_test.px_": var * float32,

"EcalScoringPlaneHits_test.py_": var * float32,

"EcalScoringPlaneHits_test.pz_": var * float32,

"EcalScoringPlaneHits_test.energy_": var * float32,

"EcalScoringPlaneHits_test.x_": var * float32,

"EcalScoringPlaneHits_test.y_": var * float32,

"EcalScoringPlaneHits_test.z_": var * float32,

"EcalScoringPlaneHits_test.pathLength_": var * float32,

"EcalScoringPlaneHits_test.trackID_": var * int32,

"EcalScoringPlaneHits_test.pdgID_": var * int32,

"HcalScoringPlaneHits_test.id_": var * int32,

"HcalScoringPlaneHits_test.layerID_": var * int32,

"HcalScoringPlaneHits_test.moduleID_": var * int32,

"HcalScoringPlaneHits_test.edep_": var * float32,

"HcalScoringPlaneHits_test.time_": var * float32,

"HcalScoringPlaneHits_test.px_": var * float32,

"HcalScoringPlaneHits_test.py_": var * float32,

"HcalScoringPlaneHits_test.pz_": var * float32,

"HcalScoringPlaneHits_test.energy_": var * float32,

"HcalScoringPlaneHits_test.x_": var * float32,

"HcalScoringPlaneHits_test.y_": var * float32,

"HcalScoringPlaneHits_test.z_": var * float32,

"HcalScoringPlaneHits_test.pathLength_": var * float32,

"HcalScoringPlaneHits_test.trackID_": var * int32,

"HcalScoringPlaneHits_test.pdgID_": var * int32,

"TargetScoringPlaneHits_test.id_": var * int32,

"TargetScoringPlaneHits_test.layerID_": var * int32,

"TargetScoringPlaneHits_test.moduleID_": var * int32,

"TargetScoringPlaneHits_test.edep_": var * float32,

"TargetScoringPlaneHits_test.time_": var * float32,

"TargetScoringPlaneHits_test.px_": var * float32,

"TargetScoringPlaneHits_test.py_": var * float32,

"TargetScoringPlaneHits_test.pz_": var * float32,

"TargetScoringPlaneHits_test.energy_": var * float32,

"TargetScoringPlaneHits_test.x_": var * float32,

"TargetScoringPlaneHits_test.y_": var * float32,

"TargetScoringPlaneHits_test.z_": var * float32,

"TargetScoringPlaneHits_test.pathLength_": var * float32,

"TargetScoringPlaneHits_test.trackID_": var * int32,

"TargetScoringPlaneHits_test.pdgID_": var * int32,

"TrigScintScoringPlaneHits_test.id_": var * int32,

"TrigScintScoringPlaneHits_test.layerID_": var * int32,

"TrigScintScoringPlaneHits_test.moduleID_": var * int32,

"TrigScintScoringPlaneHits_test.edep_": var * float32,

"TrigScintScoringPlaneHits_test.time_": var * float32,

"TrigScintScoringPlaneHits_test.px_": var * float32,

"TrigScintScoringPlaneHits_test.py_": var * float32,

"TrigScintScoringPlaneHits_test.pz_": var * float32,

"TrigScintScoringPlaneHits_test.energy_": var * float32,

"TrigScintScoringPlaneHits_test.x_": var * float32,

"TrigScintScoringPlaneHits_test.y_": var * float32,

"TrigScintScoringPlaneHits_test.z_": var * float32,

"TrigScintScoringPlaneHits_test.pathLength_": var * float32,

"TrigScintScoringPlaneHits_test.trackID_": var * int32,

"TrigScintScoringPlaneHits_test.pdgID_": var * int32,

"TrackerScoringPlaneHits_test.id_": var * int32,

"TrackerScoringPlaneHits_test.layerID_": var * int32,

"TrackerScoringPlaneHits_test.moduleID_": var * int32,

"TrackerScoringPlaneHits_test.edep_": var * float32,

"TrackerScoringPlaneHits_test.time_": var * float32,

"TrackerScoringPlaneHits_test.px_": var * float32,

"TrackerScoringPlaneHits_test.py_": var * float32,

"TrackerScoringPlaneHits_test.pz_": var * float32,

"TrackerScoringPlaneHits_test.energy_": var * float32,

"TrackerScoringPlaneHits_test.x_": var * float32,

"TrackerScoringPlaneHits_test.y_": var * float32,

"TrackerScoringPlaneHits_test.z_": var * float32,

"TrackerScoringPlaneHits_test.pathLength_": var * float32,

"TrackerScoringPlaneHits_test.trackID_": var * int32,

"TrackerScoringPlaneHits_test.pdgID_": var * int32,

"MagnetScoringPlaneHits_test.id_": var * int32,

"MagnetScoringPlaneHits_test.layerID_": var * int32,

"MagnetScoringPlaneHits_test.moduleID_": var * int32,

"MagnetScoringPlaneHits_test.edep_": var * float32,

"MagnetScoringPlaneHits_test.time_": var * float32,

"MagnetScoringPlaneHits_test.px_": var * float32,

"MagnetScoringPlaneHits_test.py_": var * float32,

"MagnetScoringPlaneHits_test.pz_": var * float32,

"MagnetScoringPlaneHits_test.energy_": var * float32,

"MagnetScoringPlaneHits_test.x_": var * float32,

"MagnetScoringPlaneHits_test.y_": var * float32,

"MagnetScoringPlaneHits_test.z_": var * float32,

"MagnetScoringPlaneHits_test.pathLength_": var * float32,

"MagnetScoringPlaneHits_test.trackID_": var * int32,

"MagnetScoringPlaneHits_test.pdgID_": var * int32,

channelIDs_: var * uint32,

samples_: var * uint32,

numSamplesPerDigi_: uint32,

sampleOfInterest_: uint32,

version_: int32,

"EcalRecHits_test.id_": var * int32,

"EcalRecHits_test.amplitude_": var * float32,

"EcalRecHits_test.energy_": var * float32,

"EcalRecHits_test.time_": var * float32,

"EcalRecHits_test.xpos_": var * float32,

"EcalRecHits_test.ypos_": var * float32,

"EcalRecHits_test.zpos_": var * float32,

"EcalRecHits_test.isNoise_": var * bool,

eventNumber_: int32,

run_: int32,

"timestamp_.fSec": int32,

"timestamp_.fNanoSec": int32,

weight_: float64,

isRealData_: bool,

"intParameters_.first": var * string,

"intParameters_.second": var * int32,

"floatParameters_.first": var * string,

"floatParameters_.second": var * float32,

"stringParameters_.first": var * string,

"stringParameters_.second": var * string

}

Oh wow, that's a lot of branches! While this printout can be overwhelming, I'd encourage you

to look through it. Each branch is named and contains the number in each event

(var is used if the number varies) and the type of object (string, float32, bool to name

a few). This printout is very helpful for familiarizing yourself with an awkward array and its structure

so don't be shy to look at it and scroll through it!

Manipulate Data

This is probably the most complicated part not least because I'm not sure what analysis you actually want to do.

As an example, and to show the power of "vectorization", I am going to calculate the total energy reconstructed within the ECal.

These lines are often short but incredibly dense. Do not be afraid to try a few out and see the results, changing the input axis,

as well as other branches of events. While it can be difficult to find the right expression to do the manipulation you wish,

trying different expressions is an incredibly quick procedure and one that I would enourage you to do. Instead of assigning

the result to a variable, you can even just print it directly to the console like events above so you can see the resulting

shape and a few example values.

awkward's website is growing with documentation and has a detailed reference of the available commands.

Nevertheless, you can find more help on numpy's website whose syntax and vocabulary is a main inspiration for awkward.

Specifically, I would guide folks to What is NumPy and the

NumPy Beginners Guide to be given helpful definitions of the relevant vocabulary

("vectorization", "array", "axis", "attribute" to name a few).

%%time

# add up the energy_ along axis 1, axis 0 is the "outermost" axis which is indexed by event

total_ecal_rec_energy = ak.sum(events['EcalRecHits_test.energy_'], axis=1)

CPU times: user 5.99 ms, sys: 2 ms, total: 7.99 ms

Wall time: 7.34 ms

Fill Histogram

Filling a histogram is where we condense a variable calculated for many events into an object that can be viewed and interpreted.

While numpy and matplotlib have histogram filling

and plotting that work well. The hist package gives

a more flexible definition of histogram, allowing you to define histograms with more axes, do calculations with histograms

(e.g. sum over bins, rebin, slicing, projection), and eventually plot them.

We won't go into multi-dimensional (more than one axis) histograms or histogram calcuations here,

but the links below give a helpful overview of available options.

- hist

- hist indexing (the stuff you can put between square brackets)

%%time

# construct the histogram defining a Regular (Reg) binning

# I found this binning after trying a few options

tere_h = hist.Hist.new.Reg(160,0,16,label='Total Reconstructed Energy in ECal [GeV]').Double()

# fill the histogram with the passed array

# I'm dividing by 1000 here to convert the MeV to GeV

tere_h.fill(total_ecal_rec_energy/1000.)

CPU times: user 1.18 ms, sys: 44 µs, total: 1.23 ms

Wall time: 1.26 ms

Double() Σ=10000.0

Plot Histogram

The final step is converting our histogram into an image that is helpful for us humans! hist connects to the standard matplotlib through a styling package called mplhep. Both of which have documentation sites that are helpful to browse so you can gain an idea of the available functions.

- matplotlib is used extensively inside and outside HEP, I often find an immediate answer with a simple internet search (e.g. searching

plt change aspect ratiotells you how to change the aspect ratio of your plot). - mplhep is a wrapper around matplotlib for HEP to do common things like plot histograms.

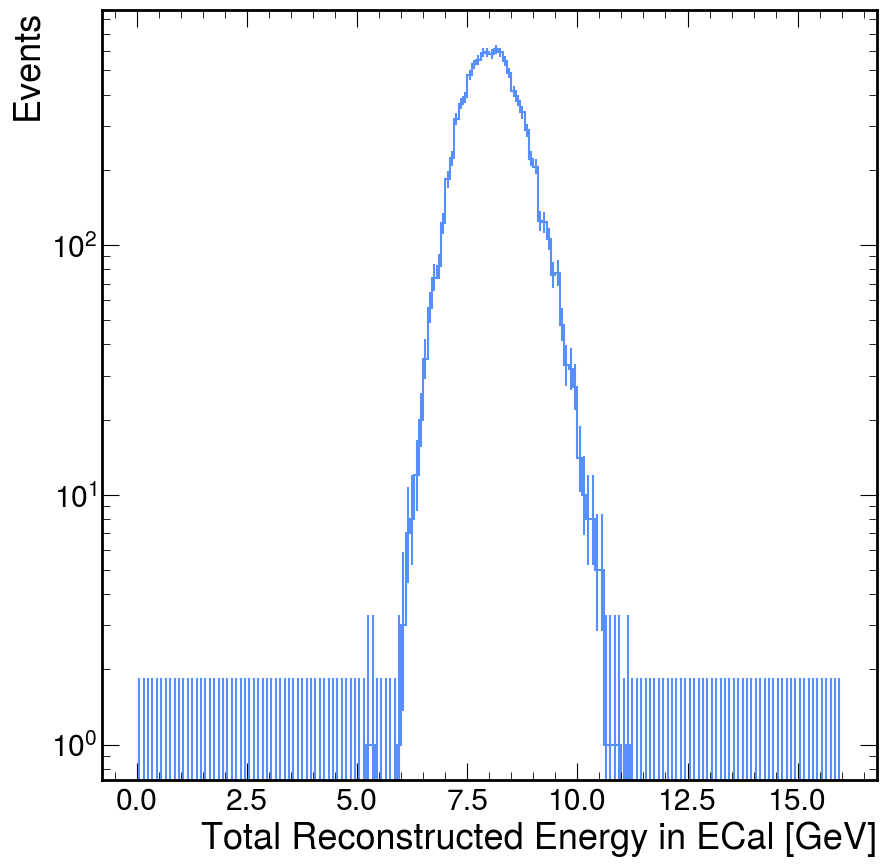

tere_h.plot()

plt.yscale('log')

plt.ylabel('Events')

plt.show()

Round-and-Round We Go

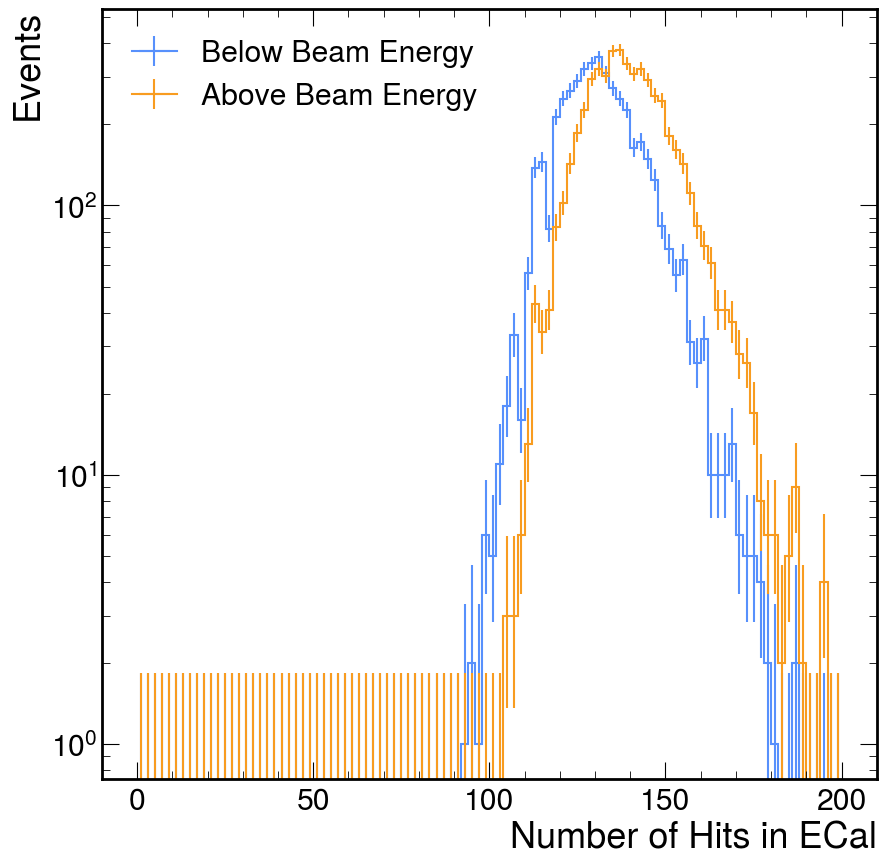

Now, in almost all of my analyses, one plot just immediately opens the door to new questions. For example, I notice that this total energy plot is rather broad, I want to see why that is. One idea is that it is simply a function of how many hits we observed in the ECal. There are many ways to test this hypothesis, but I'm going to do a specific method that showcases a specific tool that is extremely useful: boolean slicing (awkward's implementation is inspired by numpy's boolean slicing but it also interacts with the idea of broadcasting between arrays).

I also showcase a helpful feature of hist where if the first axis is a StrCategory, then

the resulting plot will just overlay the plots along the other axis.

You'll notice that, while I'm manipulating the data, filling a histogram, and plotting the histogram, I do not need to re-load the data. This is by design. Jupyter Lab (and the notebooks it interacts with) use an interactive Python "kernel" to hold variables in memory while you are using them. This is helpful to avoid the time-heavy job of loading data into memory, but it can be an area of confusion. Be careful to not re-use variable names and create new notebooks often!

nhits_by_tere_h = (

hist.Hist.new

.StrCategory(['Below Beam Energy','Above Beam Energy'])

.Reg(100,0,200,label='Number of Hits in ECal')

.Double()

)

n_ecal_hits = ak.count(events['EcalRecHits_test.id_'], axis=1)

nhits_by_tere_h.fill(

'Below Beam Energy',

n_ecal_hits[total_ecal_rec_energy < 8000.]

)

nhits_by_tere_h.fill(

'Above Beam Energy',

n_ecal_hits[total_ecal_rec_energy > 8000.]

)

nhits_by_tere_h.plot()

plt.yscale('log')

plt.ylabel('Events')

plt.legend()

plt.show()

Using ldmx-sw Directly

As you may be able to pick up based on my tone, I prefer the (efficient) python analysis

method given before using uproot, awkward, and hist. Nevertheless, there are two

main reasons I've had for putting an analysis into the ldmx-sw C++.

- Longevity: While the python ecosystem evolving quickly is helpful for obtaining new features, it can also cause long-running projects to "break" -- forcing a refactor that is purely due to upstream code changes.1 Writing an analysis into C++, with its more rigid view on backwards compatibility, essentially solidifies it so that it can be run in the same way for a long time into the future.

- Non-Vectorization: In the previous chapter, I made a big deal about how most

analyses can be vectorizable and written in terms of these pre-compiled and fast

functions. That being said, sometimes its difficult to figure out how to write

an analysis in a vectorizable form. Dropping down into the C++ allows analyzers

to write the

forloop themselves which may be necessary for an analysis to be understandable (or even functional).

This longevity issue can be resolved within python by "pinning" package versions so that all developers of the analysis code use the same version of python and the necessary packages. Nevertheless, this "pinning" also prevents analyzers from obtaining new features or bug fixes brought into newer versions of the packages.

The following example is stand-alone in the same sense as the prior chapter's jupyter notebook. It can be run from outside of the ldmx-sw repository; however, this directly contradicts the first reason for writing an analysis like this in the first place. I would recommend that you store your analyzer source code in ldmx-sw or the private ldmx-analysis. These repos also contain CMake infrastructure so you can more easily share code common amongst many processors.

Set Up

I am going to use the same version of ldmx-sw that was used to generate

the input events.root file. This isn't strictly necessary - more often

than not, newer ldmx-sw versions are able to read files from prior ldmx-sw

versions.

This tutorial uses a feature introduced into ldmx-sw in v4.0.1. One can follow the same general workflow with some additional changes to the config script described below.

cd work-area

denv init ldmx/pro:<ldmx-sw-version>

where <ldmx-sw-version> is a version of ldmx-sw that has been released. The version of ldmx-sw you want to use depends on what features

you need and input data files you want to analyze.

The following code block shows the necessary boilerplate for starting

a C++ analyzer running with ldmx-sw.

// filename: MyAnalyzer.cxx

#include "Framework/EventProcessor.h"

#include "Ecal/Event/EcalHit.h"

class MyAnalyzer : public framework::Analyzer {

public:

MyAnalyzer(const std::string& name, framework::Process& p)

: framework::Analyzer(name, p) {}

~MyAnalyzer() override = default;

void onProcessStart() override;

void analyze(const framework::Event& event) override;

};

void MyAnalyzer::onProcessStart() {

// this is where we will define the histograms we want to fill

}

void MyAnalyzer::analyze(const framework::Event& event) {

// this is where we will fill the histograms

}

DECLARE_ANALYZER(MyAnalyzer);

And below is an example python config called ana-cfg.py that I will

use to run this analyzer with fire. It assumes to be in the same

place as the source file so that it knows where the files it needs

are located.

from LDMX.Framework import ldmxcfg

p = ldmxcfg.Process('ana')

p.sequence = [ ldmxcfg.Analyzer.from_file('MyAnalyzer.cxx', needs=['Ecal_Event']) ]

p.inputFiles = [ 'events.root' ]

p.histogramFile = 'hist.root'

Unable to Load Library

Unable to Load Library

If you see an error like the one below, you are probably not linking

your stand-alone processor to its necessary libraries.

The configuration script needs to be updated with needs listing

the ldmx-sw libraries that the stand-alone processor needs to be

linked to.

For example, this tutorial uses the Ecal hits defined in the

Ecal/Event area which means we need to add 'Ecal_Event' to

the needs list.

Example error you could see...

$ denv fire ana-cfg.py

---- LDMXSW: Loading configuration --------

Processor source file /home/tom/code/ldmx/website/src/using/analysis/MyAnalyzer.cxx is newer than its compiled library

/home/tom/code/ldmx/website/src/using/analysis/libMyAnalyzer.so (or library does not exist), recompiling...

done compiling /home/tom/code/ldmx/website/src/using/analysis/MyAnalyzer.cxx

---- LDMXSW: Configuration load complete --------

---- LDMXSW: Starting event processing --------

Warning in <TClass::Init>: no dictionary for class pair<int,ldmx::SimParticle> is available

Warning in <TClass::Init>: no dictionary for class ldmx::SimParticle is available

Warning in <TClass::Init>: no dictionary for class ldmx::SimTrackerHit is available

Warning in <TClass::Init>: no dictionary for class ldmx::SimCalorimeterHit is available

Warning in <TClass::Init>: no dictionary for class ldmx::HgcrocDigiCollection is available

Warning in <TClass::Init>: no dictionary for class ldmx::EcalHit is available

Warning in <TClass::Init>: no dictionary for class ldmx::CalorimeterHit is available

... proceeds to seg fault on getEnergy ...

A quick test can show that the code is compiling and running (although it will not print out anything or create any files).

$ denv time fire ana-cfg.py

---- LDMXSW: Loading configuration --------

Processor source file /home/tom/code/ldmx/website/ldmx-sw-eg/MyAnalyzer.cxx is newer than its compiled library /home/tom/code/ldmx/website/ldmx-sw-eg/libMyAnalyzer.so (or library does not exist), recompiling...

done compiling /home/tom/code/ldmx/website/ldmx-sw-eg/MyAnalyzer.cxx

---- LDMXSW: Configuration load complete --------

---- LDMXSW: Starting event processing --------

---- LDMXSW: Event processing complete --------

7.79user 0.74system 0:08.55elapsed 99%CPU (0avgtext+0avgdata 601516maxresident)k

264inputs+2480outputs (0major+223914minor)pagefaults 0swaps

MyAnalyzer needed to be compiled which increased the time it took to run.

Re-running again without changing the source file allows us to skip this compilation step.

$ denv time fire ana-cfg.py

---- LDMXSW: Loading configuration --------

---- LDMXSW: Configuration load complete --------

---- LDMXSW: Starting event processing --------

---- LDMXSW: Event processing complete --------

3.52user 0.14system 0:03.66elapsed 100%CPU (0avgtext+0avgdata 325828maxresident)k

0inputs+0outputs (0major+58137minor)pagefaults 0swaps

Notice that we still took a few seconds to run.

This is because, even though MyAnalyzer isn't doing anything with the data,

fire is still looping through all of the events.

You do not need to include time when running.

I am just using it in this tutorial to give you a sense of how long

different commands run.

Load Data, Manipulate Data, and Fill Histograms

While these steps were separate in the previous workflow, they all share the same process

in this workflow. The data loading is handled by fire and we are expected to write the

code that manipulates the data and fills the histograms.

I'm going to look at the total reconstructed energy in the ECal again. Below is the updated

code file. Notice that, besides the additional #include at the top, all of the changes were

made within the function definitions.

// filename: MyAnalyzer.cxx

#include "Framework/EventProcessor.h"

#include "Ecal/Event/EcalHit.h"

class MyAnalyzer : public framework::Analyzer {

public:

MyAnalyzer(const std::string& name, framework::Process& p)

: framework::Analyzer(name, p) {}

~MyAnalyzer() override = default;

void onProcessStart() override;

void analyze(const framework::Event& event) override;

};

void MyAnalyzer::onProcessStart() {

/**

* In v4.5.1 ldmx-sw and earlier, forgetting `getHistoDirectory()`

* led to silently not creating any histograms.

* In v4.5.2 ldmx-sw and newer, it can be left out but it does

* no harm if left in.

*/

getHistoDirectory();

histograms_.create(

"total_ecal_rec_energy",

"Total ECal Rec Energy [GeV]", 160, 0.0, 16.0

);

}

void MyAnalyzer::analyze(const framework::Event& event) {

const auto& ecal_rec_hits{event.getCollection<ldmx::EcalHit>("EcalRecHits","")};

double total = 0.0;

for (const auto& hit : ecal_rec_hits) {

total += hit.getEnergy();

}

histograms_.fill("total_ecal_rec_energy", total/1000.);

}

DECLARE_ANALYZER(MyAnalyzer);

Look at the documentation of the HistogramPool

to see more examples of how to create and fill histograms.

In order to run this code on the data, we need to compile and run the program.

The special from_file function within the config script handles this in

most situations for us.

Below, you'll see that the analyzer is re-compiled into the library while

fire is loading the configuration and then the analyzer is used during event processing.

$ denv fire ana-cfg.py

---- LDMXSW: Loading configuration --------

Processor source file /home/tom/code/ldmx/website/ldmx-sw-eg/MyAnalyzer.cxx is newer than its compiled library /home/tom/code/ldmx/website/ldmx-sw-eg/libMyAnalyzer.so (or library does not exist), recompiling...

done compiling /home/tom/code/ldmx/website/ldmx-sw-eg/MyAnalyzer.cxx

---- LDMXSW: Configuration load complete --------

---- LDMXSW: Starting event processing --------

---- LDMXSW: Event processing complete --------

Now there is a new file hist.root in this directory which has the histogram we filled stored within it.

$ denv rootls -l hist.root

TDirectoryFile Apr 30 22:30 2024 MyAnalyzer "MyAnalyzer"

$ denv rootls -l hist.root:*

TH1F Apr 30 22:30 2024 MyAnalyzer_total_ecal_rec_energy ""

In v4.5.2 of ldmx-sw, the histogram pooling was changed to avoid referencing the name of the analyzer in both the directory and the histogram itself. Before this release, the histogram would have been located at "MyAnalyzer/MyAnalyzer_total_ecal_rec_energy" but if you are using this release or newer, the same histogram would be located at "MyAnalyzer/total_ecal_rec_energy".

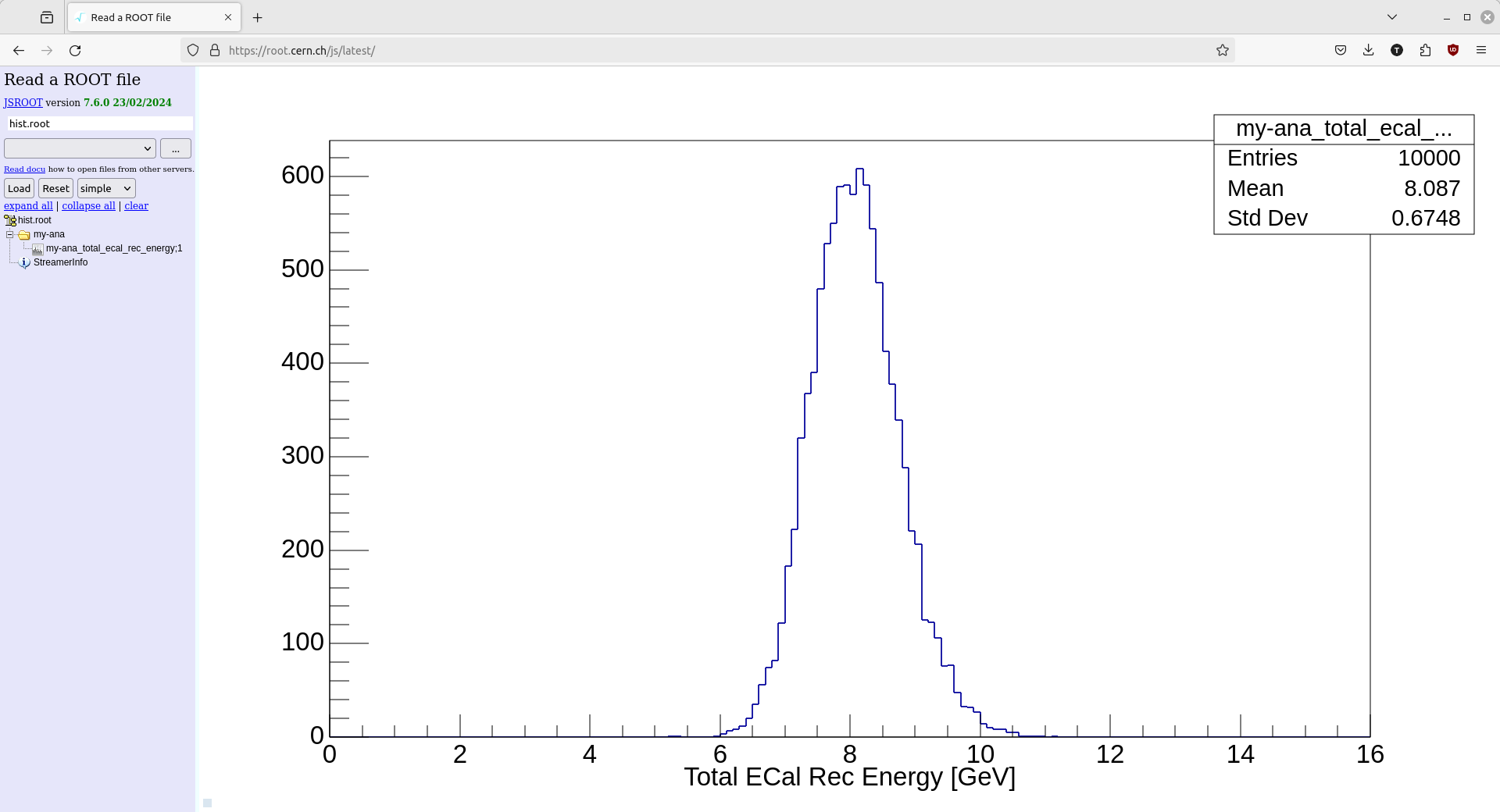

Plotting Histograms

Viewing the histogram with the filled data is another fork in the road.

I often switch back to the Jupyter Lab approach using uproot to load histograms from hist.root

into hist objects that I can then manipulate and plot with hist and matplotlib.

Accessing Histograms in Jupyter Lab

Accessing Histograms in Jupyter Lab

I usually hold the histogram file handle created by uproot and then use the to_hist() function

once I find the histogram I want to plot in order to pull the histogram into an object I am

familiar with. For example

f = uproot.open('hist.root')

h = f['MyAnalyzer/MyAnalyzer_total_ecal_rec_energy'].to_hist()

h.plot()

But it is also very common to view these histograms with one of ROOT's browsers.

There is a root browser within the dev images (via denv rootbrowse or ldmx rootbrowse),

but I also like to view the histogram with the online ROOT browser.

Below is a screenshot of my browser window after opening the hist.root file and then

selecting the histogram we filled.

Pre-4.0.1 Standalone Analyzers

PR #1310 goes into detail on the necessary changes, but - in large part - we can mimic the feature introduced in v4.0.1 with some additional python added into the config file.

The file below hasn't been thoroughly tested and may not work out-of-the-box. Please open a PR into this documentation if you find an issue that can be patched generally.

from pathlib import Path

class StandaloneAnalyzer:

def __init__(self, instance_name, class_name):

self.instanceName = instance_name

self.className = class_name

self.histograms = []

@classmethod

def from_file(cls, source_file):

if not isinstance(source_file, Path):

source_file = Path(source_file)

if not source_file.is_file():

raise ValueError(f'{source_file} is not accessible.')

src = source_file.resolve()

# assume class name is name of file (no extension) if not provided

class_name = src.stem

instance_name = class_name

lib = src.parent / f'lib{src.stem}.so'

if not lib.is_file() or src.stat().st_mtime > lib.stat().st_mtime:

print(

f'Processor source file {src} is newer than its compiled library {lib}'

' (or library does not exist), recompiling...'

)

import subprocess

# update this path to the location of the ldmx-sw install

# this is the correct path for production images

ldmx_sw_install_prefix = '/usr/local'

# for dev images, you could look at using

#ldmx_sw_install_prefix = f'{os.environ["LDMX_BASE"]}/ldmx-sw/install'

subprocess.run([

'g++', '-fPIC', '-shared', # construct a shared library for dynamic loading

'-o', str(lib), str(src), # define output file and input source file

'-lFramework', # link to Framework library (and the event dictionary)

'-I/usr/local/include/root', # include ROOT's non-system headers

f'-I{ldmx_sw_install_prefix}/include', # include ldmx-sw headers

f'-L{ldmx_sw_install_prefix}/lib', # include ldmx-sw libs

], check=True)

print(f'done compiling {src}')

ldmxcfg.Process.addLibrary(str(lib))

instance = cls(instance_name, class_name)

return instance

p = ldmxcfg.Process('ana')

import os

p.sequence = [ StandaloneAnalyzer.from_file('MyAnalysis.cxx') ]

p.inputFiles = [ 'events.root' ]

p.histogramFile = 'hist.root'

Running and Configuring fire

The fire application is used for simulation, reconstruction, and analysis of data from the LDMX experiment. The application receives its configuration for a given purpose by interpreting a python script which contains instructions about what C++ code to run and what parameters to use. The python script is not run for each event, but instead is used to determine the configuration of the C++ code which is run for each event.

Brief summary of running fire

From the commandline, the application is run as follows:

$ denv fire {configuration_script.py} [arguments for configuration script]

The configuration script language is discussed in more detail below.

Other prefixes besides denv

Other prefixes besides denv

denv was pre-dated by a set of bash functions whose root command was ldmx.

This means you can see ldmx as the main prefix for commands that

should be running within the ldmx-sw environment.

In most cases, one can simply replace ldmx with denv since they have

similar design goals.

Developers of ldmx-sw may also be using the recipe manager just and run

the above with just as the prefix. This just (pun intended) runs denv fire

under-the-hood, so it is also equivalent.

Configuration script arguments

Arguments after the .py file on the commandline will be passed to the script as normal arguments to any python script.

These can be used in many ways to adjust the behavior of the script. Some examples are given below in the Tips and Tricks section.

Processing Modes

There are two processing modes that fire can run in which are defined by the presence of input data files1.

If there are no input data files, then fire is in Production Mode while if there are input data files, then

fire is in Reconstruction Mode. The names of these two modes originate from how fire is used in LDMX research.

In this context, an "input data file" is a data file previously produced by fire. There can be other types of data that are "input" into fire but unless fire is actually treating those input files as a reference, fire is considered to be in "Production Mode". See the inputFiles configuration parameter below.

The Process Object

The Process object represents the overall processing step. It must be included in any fire configuration. When the Process is constructed, the user must provide a processing pass name. This processing pass name is used to identify the products in the data file and cannot be repeated when later processing the same file. The pass name is useful when a file has been processed multiple times -- for example, when reprocessing a file with new calibration constants.

As an example, the pass name is practice, so the line which constructs the Process is:

p = ldmxcfg.Process("practice")

Here is a list of some of the most important process options and what they control.

It is encouraged to browse the python modules themselves for all the details,

but you can also call help(ldmxcfg.Process) in python to see the documentation.

passName(string)- required Given in the constructor, tag for the process as a whole.

- For example:

"10MeVSignal"for a simulation of a 10MeV A'

run(integer)- Unique number identifying the run

- For example:

9001 - Default is

0

maxEvents(integer)- Maximum number of events to run for

- Required for Production Mode (no input files)

- In Reconstruction Mode, the number of events that are run is the number of events in the input files unless this parameter is positive and less than the input number.

- For example:

9000

outputFiles(list of strings)- List of files to output events to

- Required to be exactly one for Production Mode, and either exactly one or exactly the number of input files in Reconstruction Mode.

- For example:

[ "output.root" ]

inputFiles(list of strings)- List of files to read events in from

- Required if no output files are given

- For example:

[ "input.root" ]

histogramFile(string)- Name of file to put histograms and ntuples in

- For example:

"myHistograms.root"

sequence(list of event processors)- Required to be non-empty

- List of processors to run the events through (in the order given)

- For example:

[ mySimulator, ecalDigis, ecalRecon ]

keep(list of strings)- List of drop/keep rules to help ldmx-sw know which collections to put into output file(s)

- Slightly complicated, see the documentation of EventFile

- For example:

[ "drop .*SimHits.*" , "keep Ecal.*" ]

skimDefaultIsKeep(bool)- Should the process keep events unless told to drop by a processor?

- Default is

True: events will be kept unless processors usesetStorageHint. - Use

skimDefaultIsDrop()orskimDefaultIsSave()to modify

skimRules(list of strings)- List of processors to use to decide whether an event should be stored in the output file (along with the default given above).

- Has a very specific format, use

skimConsider( processorName )to modifyprocessorNameis the instance name and not the class name

- For example, the Ecal veto chooses whether an event should be kept or not depending on a lot of physics stuff. If you only want the events passing the veto to be saved, you would:

p.skimDefaultIsDrop() #process should drop events unless specified otherwise

p.skimConsider('ecalVeto') #process should listen to storage hints from ecalVeto

logFrequency(int)- How frequently should the processor tell you what event number it is on?

- Default is

-1which is never.

Configuration File Basics

Often times, the script that is passed to fire to configure how ldmx-sw runs and processes data is called a "config script" or even simply "config". This helps distinguish these python files from other python files who have different purposes (like filling histograms or creating plots).

There are many configs stored within ldmx-sw itself which mostly exist to help developers quickly test any code developments they write. These examples, while often complicated at first look, can be helpful for a new user wishing to learn more about how to write their own config for ldmx-sw.

.github/validation_samples/<name>/config.py: configs used in automatic PR validation tests, these have a variety of simulations as well as the full suite of emulation and reconstruction processors with ldmx-swBiasing/test/: configs used to help manually test developments in the Biasing and Simulation code, these mostly just have simulations and do not contain emulation and reconstruction processorsexampleConfigsdirectory within modules: configs written by module developers to help describe how to use the processors they are developing

In order to help guide your browsing, below I've written two example configs that will not run but they do show code snippets that are similar across a wide variety of configs.

In all configs, there will be the creation of the Process object where the name of that pass is defined and there will be some lines defining the p.sequence of processors

that will be run to process the data.

Additional notes:

- Using the variable name

pfor theProcessobject is just convention and is not strictly necessary; however, it does lend itself nicely to sharing bits of code between configs. - Most of the time, especially for processors that are already written into ldmx-sw, the construction of a processor is put into its own python module so that the C++ class name, the module name, and any other parameters can be properly spelled once and used everywhere.

Minimal Production Config

In Production Mode, there won't be a p.inputFiles line but there will be a line setting p.run to some value.

from LDMX.Framework import ldmxcfg

p = ldmxcfg.Process('mypass')

p.outputFiles = ['output_events.root']

p.run = 1

p.sequence = [

ldmxcfg.Producer('my_producer','some::cpp::MyProducer','Module')

]

Minimal Reconstruction Config

In Reconstruction Mode, there will be a p.inputFiles line but since p.run will be ignored it is often omitted.

from LDMX.Framework import ldmxcfg

p = ldmxcfg.Process('myreco')

p.inputFiles = ['input_events.root']

p.outputFiles = ['rereco_input_events.root']

p.sequence = [

ldmxcfg.Producer('my_reco', 'some::cpp::MyReco', 'Module')

]

Structure of Event Processors

In ldmx-sw, processors have a structure that overlap between C++ and python where we attempt to use the strengths of both languages.

In C++, we do all the heavy lifting and try to utilize the language's speed and efficiency.

In python, we do the complicated task of assigning values for all of the configuration parameters, regardless of type.

C++

The C++ part of the event processor is a derived class that has multiple different methods accessing different parts at different points of time along the processing chain. I am not going to go through all of those parts in detail, but I will focus on two methods that are used in almost all processors.

The configure method of your event processor is given an object called Parameters which contains all the parameters retrieved from python (more on that later).

This method is called immediately after the processor is constructed, so it is the place to set up your processor and make sure it will do what you want it to do.

The produce (for Producers) or analyze (for Analyzers) method of your event processor is given the current event object.

With this event object, you can access all of the objects that have been previously stored into it and (for Producers only)

put new event objects into it.

Here is an outline of a Producer with these two methods. This was not tested. It is just here for illustrative purposes.

// in the header file

// ldmx-sw/MyModule/include/MyModule/MyProducer.h

namespace mymodule {

/**

* We inherit from the Producer class so that

* the application can know what to do with us.

*/

class MyProducer : public Producer {

public:

/**

* Constructor

* Required to match the structure set up by Producer

*/

MyProducer(const std::string& name, Process& p) : Producer(name, p) {}

/**

* Destructor

* Marked override so that it will be called when MyProducer

* is destructed via a pointer of type Producer*

*/

~MyProducer() override = default;

/**

* Configure this instance of MyProducer

*

* We get an object storing all of the parameters set in the python.

*/

void configure(Parameters& params) override;

/**

* Produce for the input event

*

* Here is where you do all your work on an event-by-event basis.

*/

void produce(Event& event) override;

private:

/// a parameter we will get from python

int my_parameter_;

/// another parameter we will get from python

std::vector<double> my_other_parameter_;

}; // MyProducer

} // mymodule

// in the source file

// ldmx-sw/MyModule/src/MyModule/MyProducer.cxx

#include "MyModule/MyProducer.h"

namespace mymodule {

void MyProducer::configure(const Parameters& params) {

my_parameter_ = params.getParameter<int>("my_parameter");

my_other_parameter_ = params.getParameter<std::vector<double>>("my_other_parameter");

std::cout << "I also know the name "

<< params.getParameter<std::string>("instanceName")

<< " and class "

<< params.getParameter<std::string>("className")

<< " of this copy of MyProcessor" << std::endl;

}

void MyProducer::produce(Event& event) {

// insert processor's event-by-event work here

}

} // mymodule

DECLARE_PRODUCER_NS(mymodule, MyProducer);

Python

The python part of the event processor is set up to allow the user to specify the parameters the processor should use. We also define a class in python that will be accessed by the application.

The python class has three objectives:

- Define all of the parameters that the processor requires (and reasonable defaults)

- Include the library that the C++ processor is apart of

- Tell the configuration what the C++ class it links to is

Here is an outline of the python class that would go with the C++ class MyProducer above.

class MyProducer(Producer) :

"""An outline for a producer configuration in python

Parameters

----------

my_parameter : int

An example of an integer parameter

my_other_parameter : list[float]

An example of a vector of doubles parameter

"""

def __init__(self,name) :

super().__init__(

name, # unique instance name because you could have more than one copy of a processor

'cool::MyProducer', #the full name including namespaces of the C++ class

'MyModule' #the name of the module this processor is in (e.g. Ecal or Analysis)

)

# define the parameters and their defaults

# notice that the names of the parameters

# are what we look for in the C++ configure method

self.my_parameter = 5

self.my_other_parameter = [ 1. , 2. , 3. ]

Now in a configuration script you can create a configuration for MyProducer and (if you want) change some of the parameters to something other than the defaults.

# in a configuration python script

# my_producer is the python file in MyModule/python

# that contains the python class definition of MyProducer

from LDMX.MyModule import my_producer

myProd = my_producer.MyProducer('myProd')

myProd.my_parameter = 10 #will change the 5 to 10 so the C++ class will receive 10

Other Objects

This structure for Event Processors is pretty general, and actually there are other objects in the C++ application that are configured in this way: PrimaryGenerators, UserActions, ConditionsObjectProviders, DarkBremModels, BiasingOperators.

Drop Keep Rules

Bugs with Drop Keep Rules

Bugs with Drop Keep Rules

Some issues with the drop keep rules have been reported on GitHub: Issue #1297. Check there if you are having issues to see if you need to update or if there is a work-around.

By default, all data that is added to an event (via event.add within a produce function)

is written to the output data file. This is a helpful default because it allows users to

quickly and write a new config and see everything that was produced by it.

Nevertheless, it is often helpful to avoid storing specific event objects within the output file mostly because the output file is getting too large and removing certain objects can save disk space without sacrificing too much usability for physics analyses. For this purpose, our processing framework has a method for configuring which objects should be stored in the output file. This configuration is colloquially called "drop keep rules" since some objects can be "dropped" during processing (generated in memory but not written to the output file) while others can be explicitly kept (i.e. written to the output file).

The drop keep rules are written in the config as a python list of strings

# p is the ldmxcfg.Process object created earlier

p.keep = [ '<rule0>', '<rule1>', ... ]

the implementation of these rules is done in the

framework::EventFile class

specifically the addDrop function handles the deduction of these rules.

Rule Format

Each rule in the list should be a string with a single space.

decision expression

decisionis one of three strings:drop,keep, andignore.expressionis a regular expression that will be compared against the event object names

decision

As the name implies, this first string is the definition of what should happen to an event object that this rule applies to. It must exactly match one of the strings below.

drop: event objects matchingexpressionshould not be written to the output filekeep: event objects matchingexpressionshould be written to the output fileignore: event objects matchingexpressionshould not even be read in from the input file

The "ignore" decision is leftover from earlier versions of the processing framework that would load all event objects from the input file into memory for processing. Current versions of framework do not do this and so this decision is largely not needed.

Perhaps a future version of framework will fully remove this as an option after testing confirms that this behavior is not needed.

expression

This regular expression hasn't been fully tested against all the powers of regular expressions. What has been tested is basic sub-string matching and so it is advised to stay within the realm of sub-string matching.

Since we append the pass name of a process to the end of these event objects created within that

process, we expect this expression to be focused on matching the prefix of the full object name.

Thus, if an expression emph does not end in a * character, one is appended.

Ordering

The rules are applied in order and can override one another. This allows for more complicated decisions to be made. In essence, the last rule in the list whose expression matches the event object's name, that is the decision that will be applied.

I can drop all of the scoring plane hit collections except the one for the ECal.

p.keep = [

'drop .*ScoringPlane.*',

'keep EcalScoringPlane.*'

]

In a very tight disk space environment, you can drop all event objects and then only keep ones you specifically require. In general, this is not recommended (and to be honest, I'm not sure if it works).

p.keep = [

'drop .*',

'keep HcalHits.*',

'keep EcalHits.*',

]

The above would then have a file with only the Hcal and Ecal hits.

Make sure to thoroughly test run your config with the setting of p.keep to make sure that

everything you need is in the output file. It is very easy to mis-type one of these patterns

and prevent anything from being written to the output file.

Event Skimming

Another feature of our event processing framework is selecting out only certain events to be written into the output file. This is colloquially called "skimming" and is helpful for many reasons, primarily for users to be able to not waste disk space on events that are not interesting for an analysis.

Since not writing events into the output file is an inherently destructive feature, event skimming will only operate if both the config and the processors being run by the config support it. Both play a part in how the choice to keep an event is made and so they are described below.

The StorageControl class in the processing framework is what handles the event skimming decisions and the input from the config and processors.

Processor

On the C++ side of things, processors can use their protected member function setStorageHint

to "hint" to the framework what that processor wants to happen to the event. The argument

to this function is a framework::StorageControl::Hint value as well as a string that can

be used to give a more detailed "purpose".

Hints are then used to algorithmically decide if an event should be written to the output file (kept) or not (dropped). Only hints passing the "listening rules" (see below) are included in this algorithm.

Must*: "must"-style hints are dominant. If any processor provides a "must" hint, then that decision is made.MustDroptakes precendence overMustKeepif both are provided.Should*: "should"-style hints are less forceful and are just used to tally votes. A simple majority of should hints is used to make the decision if no must hints are provided.- If there is a tie (including if no hints are provided), then the default decision is made.

Config

The config script can alter which processors the framework will "listen" to when deciding what to do with an event by checking for specific processor names and (optionally) only specific "purpose" strings from that processor. In addition, the config script defines what the default decision is in the case of no hints or a tie in voting.

Listening Rules

The format of the string representing a listening rule is a bit complicated, so it is best

to just use the p.skimConsider function when defining which processors you wish to "listen"

to (or "consider") when making a skimming decision. Most commonly, a user just has one processor

that they want to make the skimming decision and in this case you can just provide the processors

name.

p.skimConsider(my_processor.instanceName)

Technically, the listening rules use regular expressions for both the processor name and the purpose, so one could get quite fancy about which processors (and purposes) are selected. This more intricate skimming method has not been well tested.

Default Decision

The default storage decision is what will be used if no processors that are being listened

to provide a hint during processing. Since the default configuration is to listen to no

processors at all, the default configuration of the default decision is to keep i.e. without

any config changes, we keep all events that are processed. Without changing this,

you could have a processor that explicity "drops" events and update the config to consider

this processor in order to run over events and drop the "uninteresting" ones

(using p.skimConsider like above).

On the other hand, we could set the default decision to drop all the events and instead have a processor specifically "keep" events that are interesting. In this case, you would want to add the following to your config.

p.skimDefaultIsDrop()

along with a p.skimConsider so that something ends up in your output event file.

This style of event dropping is geared more towards readout, reconstruction, and analysis decisions where the event is already fully created in memory and we just want to make a decision about it.

In the simulation, we also allow for events to be "aborted" in the middle of creating it so that we can end the event early and save computing resources from being wasted on an event we've decided is not interesting (for whatever reason). This style of "dropping" events is more commonly called "filtering" the simulation and is more complicated than what is described here since it operates during the Geant4 simulation.

Tips and Tricks

Command Line Arguments

Command Line Arguments

Python has an easy-to-use argument parsing library. This library can be used within an ldmx-sw configuration script in order to change the parameters you are passing to the processors or even to change which processors you use.

For example, I use the code snippet often to pass inputs to the process.

import argparse

from pathlib import Path

parser = argparse.ArgumentParser()